Basic information

- Intranet client
  - IP address: 192.168.7.55/24
- Simulated external client
  - IP address: 10.12.26.20/24
- Server / RS
  - IP address: 192.168.7.27/24
- Load balancer / DPVS
  - Intranet: dpdk0.kni
    - IP address 1: 192.168.7.82/24 (intranet management IP)
    - IP address 2: 192.168.7.182/32 (intranet VIP, only attached in user space)
    - Netmask: 255.255.255.0 (/24)
    - Gateway: 192.168.7.20
  - External: dpdk1.kni
    - IP address 1: 10.20.30.45/24 (used for OSPF peering)
    - Netmask: 255.255.255.0
    - Gateway: 10.20.30.1
    - IP address 2: 100.96.122.210 (simulated public IP; only the address itself needs to be advertised)
- Switch
  - VLAN
    - Intranet: 700
    - External client: 1226
    - External OSPF: 2345

场景概览

场景一: 内网单臂负载均衡

实现概览

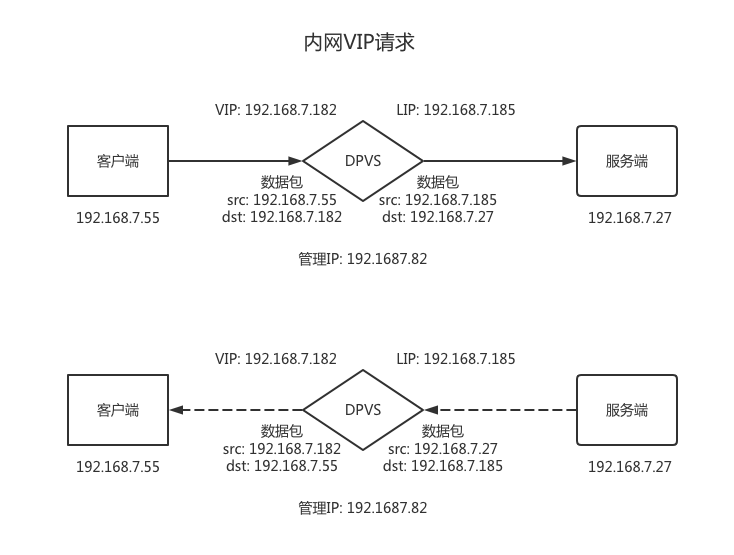

- 基于 keepalived 为 RS 提供四层负载均衡能力

- 客户端、DPVS、RS同属属于可通达子网,实现二层直接转发

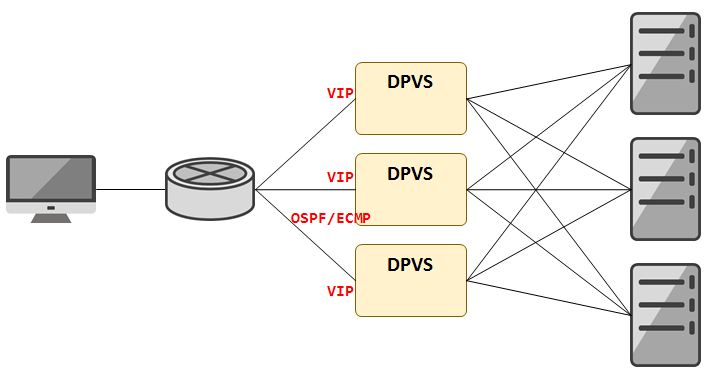

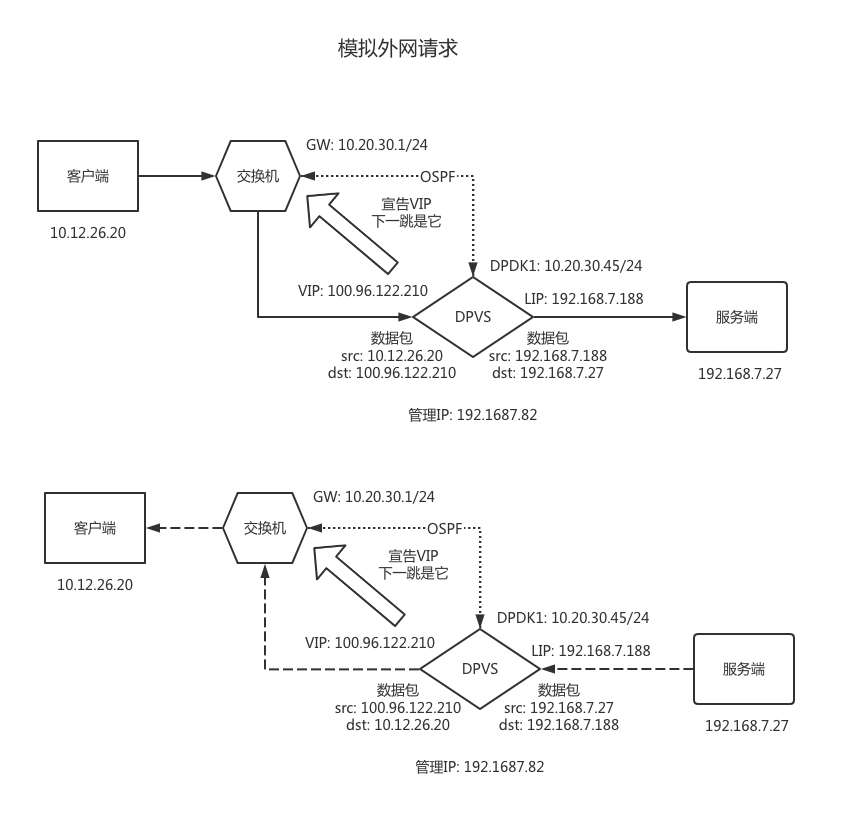

场景二: 外网双臂OSPF/ECMP负载均衡

实现概览

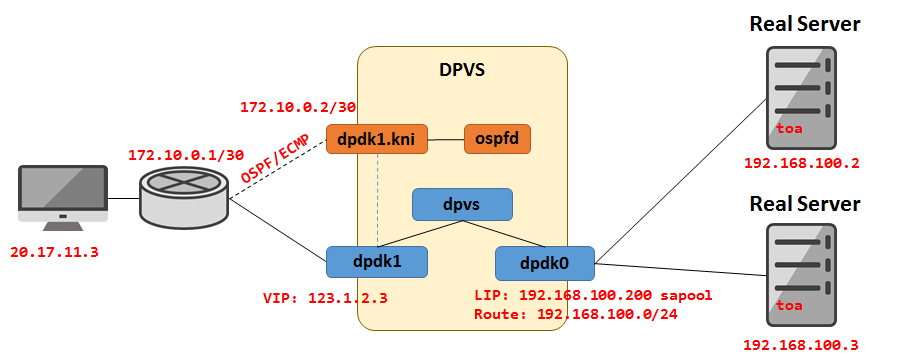

依靠 dpdk1.kni 网卡配置内网地址,与交换机建立 OSPF

在 DPVS 下给 dpdk1 网卡配置外网地址,并向宣发交换机,形成动态路由,导入外网流量

再通过 dpdk0 网卡上配置的 LIP 地址,向内网 RS 转发请求 ( 需确保 LIP 与 RS 在同一子网下,且路由可达 )

System configuration

Kernel IP forwarding must be enabled.

echo "net.ipv4.conf.all.forwarding=1" >> /etc/sysctl.conf
echo "net.ipv6.conf.all.forwarding=1" >> /etc/sysctl.conf
sysctl -p

Scenario 1: Intranet load balancing

IP configuration

The external NIC configuration is already included here.

export DPDKDevBind="/usr/local/dpvs/dpdk-stable-20.11.1/usertools/dpdk-devbind.py"

# Load the uio_pci_generic driver for the NICs
modprobe uio_pci_generic

# Load the rte_kni.ko kernel module
insmod /usr/local/dpvs/dpdk-stable-20.11.1/dpdkbuild/kernel/linux/kni/rte_kni.ko carrier=on

# Switch the NICs over to KNI
ifconfig eno5 down
eth0_bus_info=`ethtool -i eno5 | grep "bus-info" | awk '{print $2}'`
${DPDKDevBind} -b uio_pci_generic ${eth0_bus_info}

ifconfig eno6 down
eth1_bus_info=`ethtool -i eno6 | grep "bus-info" | awk '{print $2}'`
${DPDKDevBind} -b uio_pci_generic ${eth1_bus_info}

# Bring up the intranet NIC
ip link set dpdk0.kni up

# Configure the intranet IPs
/usr/local/dpvs/bin/dpip addr add 192.168.7.82/24 dev dpdk0
/usr/local/dpvs/bin/dpip addr add 192.168.7.182/32 dev dpdk0

# Configure the intranet routes
/usr/local/dpvs/bin/dpip route add 192.168.0.0/16 via 192.168.7.20 dev dpdk0
/usr/local/dpvs/bin/dpip route add 172.16.0.0/12 via 192.168.7.20 dev dpdk0
/usr/local/dpvs/bin/dpip route add 10.0.0.0/8 via 192.168.7.20 dev dpdk0

# Bring up the external NIC
ip link set dpdk1.kni up

# Configure the internal OSPF IP, used to establish OSPF with the upstream switch
/usr/local/dpvs/bin/dpip addr add 10.20.30.45/24 dev dpdk1
/usr/local/dpvs/bin/dpip route add default via 10.20.30.1 dev dpdk1

# Configure the external address that receives external traffic
/usr/local/dpvs/bin/dpip addr add 100.96.122.210/32 dev dpdk1

ip addr add 192.168.7.82/24 dev dpdk0.kni
ip addr add 10.20.30.45/24 dev dpdk1.kni

# Add the VIP and the external address to the local lo interface. This is only for
# user-space packet translation; the actual data path is already configured through dpip.
# If they are added to dpdk1 instead, abnormal packets are reported to the switch, but with no functional impact.
ip addr add 192.168.7.182/32 dev lo
ip addr add 100.96.122.210/32 dev lo

route add -net 192.168.0.0/16 gw 192.168.7.20 dev dpdk0.kni
route add -net 172.16.0.0/12 gw 192.168.7.20 dev dpdk0.kni
route add -net 10.0.0.0/8 gw 192.168.7.20 dev dpdk0.kni
route add default gw 10.20.30.1 dev dpdk1.kni

keepalived configuration

Note that the keepalived used here has been modified by the iQIYI team specifically for DPVS. After DPVS is built and installed with its default configuration, it is located at /usr/local/dpvs/bin/keepalived. Some parameters have been adapted for DPVS and differ slightly from the upstream version.

See the linked page for details.

Startup script

/etc/init.d/keepalived

#!/bin/sh
#
# Startup script for the Keepalived daemon
#
# processname: keepalived
# pidfile: /var/run/keepalived.pid
# config: /etc/keepalived/keepalived.conf
# chkconfig: 35 21 79
# description: Start and stop Keepalived

# Global definitions
PID_FILE="/var/run/keepalived.pid"

# source function library
. /etc/init.d/functions

RETVAL=0

start() {
    echo -n "Starting Keepalived for LVS: "
    daemon /usr/local/dpvs/bin/keepalived -f /etc/keepalived/keepalived.conf -D
    RETVAL=$?
    echo
    [ $RETVAL -eq 0 ] && touch /var/lock/subsys/keepalived
    return $RETVAL
}

stop() {
    echo -n "Shutting down Keepalived for LVS: "
    /bin/bash /etc/keepalived/check_kp.sh
    killproc keepalived
    RETVAL=0
    echo
    [ $RETVAL -eq 0 ] && rm -f /var/lock/subsys/keepalived
    return $RETVAL
}

reload() {
    echo -n "Reloading Keepalived config: "
    killproc keepalived -1
    RETVAL=$?
    echo
    return $RETVAL
}

# See how we were called.
case "$1" in
    start)
        start
        ;;
    stop)
        stop
        ;;
    restart)
        stop
        start
        ;;
    reload)
        reload
        ;;
    status)
        status keepalived
        ;;
    condrestart)
        [ -f /var/lock/subsys/keepalived ] && $0 restart || :
        ;;
    *)
        echo "Usage: $0 {start|stop|restart|reload|condrestart|status}"
        exit 1
esac

exit 0

Set permissions

chmod 755 /etc/init.d/keepalived
systemctl daemon-reload
service keepalived status

配置文件

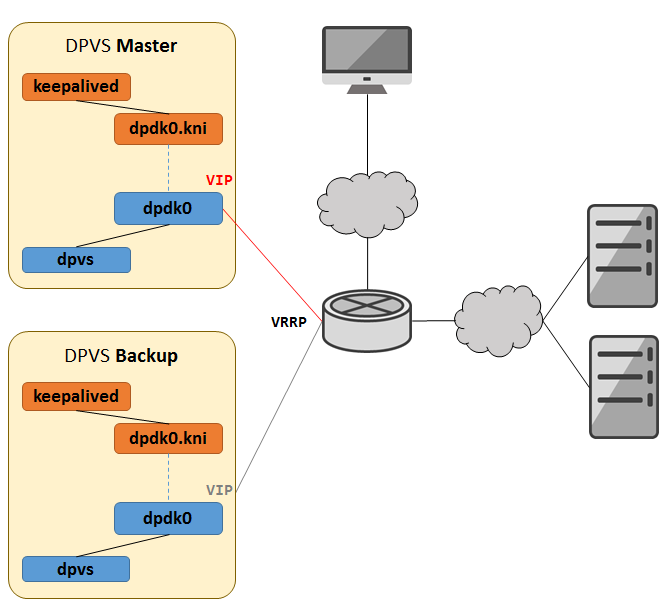

一般生产环境 DPVS 节点会采用双/多节点形式,借助ECMP实现路由流量负载均衡。

RS 依靠 DPVS 上的 keepalived 权重设置,实现业务流量负载均衡。

但 keepalived 的 VS 功能只能实现 RS 健康上下线,而无法实现路由流量在异常时下线,这是需要依靠 keepalived 的 VRRP 特性进行 DPVS 节点间心跳检查、执行脚本,曲线下线。

注意:

- VIP与RS的端口需要一一对应,即当请求 RS 的 80 端口时,客户端要请求 VIP 的 80 端口;

/etc/keepalived/keepalived.conf – keepalived运行配置

master 节点配置

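# keepalived main configuration
! Configuration File for keepalived

global_defs {
    router_id DPVS_DEVEL
}

local_address_group laddr_g1 {
    # Local (forwarding) IP group: must be able to reach the RS subnet; connections to the RS are opened from these IPs
    192.168.7.185 dpdk0
    # More local IPs can be added to raise the upper limit on forwarding ports (local_port_range)
    # 192.168.7.186 dpdk0
    # 192.168.7.187 dpdk0
}

vrrp_instance HA_INWEB {
    ## This block is optional. It is configured here so that when keepalived stops, a script runs to withdraw the IPs forwarded by the switch
    state MASTER
    interface dpdk0.kni          # should be kni interface
    dpdk_interface dpdk0         # should be DPDK interface
    virtual_router_id 210        # the VRID must be unique within the subnet
    priority 100                 # master's priority is bigger than worker
    advert_int 7
    authentication {
        auth_type PASS
        auth_pass devweb210
    }
    virtual_ipaddress {
        192.168.7.210            ## VIP shared by a group of DPVS nodes; a free IP provided by the SA
    }
    notify_stop /etc/keepalived/check_kp.sh   ## key setting: withdraws the external VIP routes so that no traffic arrives after the node fails
}

virtual_server 192.168.7.182 80 {    # accept requests on port 80 of the VIP
    delay_loop 3                     # interval between RS health checks, in seconds
    lb_algo rr
    lb_kind FNAT
    protocol TCP
    syn_proxy
    laddr_group_name laddr_g1        # connect to the RS from this local address group
    # Run this script if all RS health checks fail
    quorum_down /etc/keepalived/check_rs.sh
    # For multiple RS, repeat the block below; no separator is needed between blocks
    ## Explanation of a single RS block
    # Forward from port 80 of the VIP to port 80 of the RS
    real_server 192.168.7.27 80 {
        weight 100
        TCP_CHECK {
            # Connection timeout 3 s, 2 retries, 3 s between retries
            # Combined with delay_loop, the RS is taken offline after a sustained failure of 9 s at the shortest and 12 s at the longest
            connect_timeout 3
            nb_sock_retry 2
            delay_before_retry 3
            connect_port 80
        }
    }
}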

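Backup node configuration

Only the master node is enabled for this test.

Note the following points:

- local_address_group
  - The local IPs must be able to reach the RS subnet and must not conflict with IPs already in use in the subnet
- vrrp_instance
  - state must be changed to BACKUP
  - virtual_router_id must match the master and be unique within the subnet
  - priority must be lower than the master's
- All other settings stay the same as on the master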

# keepalived main configuration
! Configuration File for keepalived

global_defs {
    router_id DPVS_DEVEL
}

local_address_group laddr_g1 {
    # Local (forwarding) IP group: must be able to reach the RS subnet; connections to the RS are opened from these IPs
    192.168.7.195 dpdk0
    # More local IPs can be added to raise the upper limit on forwarding ports (local_port_range)
    # 192.168.7.196 dpdk0
    # 192.168.7.197 dpdk0
}

vrrp_instance HA_INWEB {
    ## This block is optional. It is configured here so that when keepalived stops, a script runs to withdraw the IPs forwarded by the switch
    state BACKUP
    interface dpdk0.kni          # should be kni interface
    dpdk_interface dpdk0         # should be DPDK interface
    virtual_router_id 210        # the VRID must be unique within the subnet
    priority 90                  # master's priority is bigger than worker
    advert_int 7
    authentication {
        auth_type PASS
        auth_pass devweb210
    }
    virtual_ipaddress {
        192.168.7.210            ## VIP shared by a group of DPVS nodes; a free IP provided by the SA
    }
    notify_stop /etc/keepalived/check_kp.sh   ## key setting: withdraws the external VIP routes so that no traffic arrives after the node fails
}

...
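The backup-node rules listed above (state, virtual_router_id, priority) are easy to get wrong when copying the master file. The following is a minimal sketch of a consistency check, not part of DPVS or keepalived: `conf_value` and `check_backup_conf` are hypothetical helper names, and the parsing assumes one directive per line with a single vrrp_instance per file.

```shell
#!/bin/sh
# conf_value KEYWORD FILE: print the value that follows the first
# occurrence of KEYWORD at the start of a line (hypothetical helper).
conf_value() {
    awk -v key="$1" '$1 == key { print $2; exit }' "$2"
}

# check_backup_conf MASTER_CONF BACKUP_CONF
# Returns 0 when the backup file follows the three VRRP rules, 1 otherwise.
check_backup_conf() {
    master="$1"; backup="$2"; rc=0

    # Rule 1: the backup node must declare "state BACKUP".
    if [ "$(conf_value state "$backup")" != "BACKUP" ]; then
        echo "state is not BACKUP"; rc=1
    fi

    # Rule 2: both nodes must share the same virtual_router_id.
    if [ "$(conf_value virtual_router_id "$master")" != \
         "$(conf_value virtual_router_id "$backup")" ]; then
        echo "virtual_router_id differs from master"; rc=1
    fi

    # Rule 3: the backup priority must be strictly lower.
    mp=$(conf_value priority "$master")
    bp=$(conf_value priority "$backup")
    if [ -z "$mp" ] || [ -z "$bp" ] || [ "$bp" -ge "$mp" ]; then
        echo "backup priority is not lower than master"; rc=1
    fi

    return $rc
}
```

Assuming the two files were copied to one machine under illustrative names, the check could be run as `check_backup_conf master.conf backup.conf`. It does not verify that the local IPs in local_address_group are free in the subnet; that still has to be confirmed separately.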

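/etc/keepalived/check_kp.sh - service-level VIP withdrawal script for keepalived

Runs when keepalived on the node fails or is stopped. It withdraws all externally facing VIPs so that business traffic no longer reaches this node.

#!/bin/bash
ip addr del 192.168.7.182/32 dev lo
ip addr del 100.96.122.210/32 dev lo

/etc/keepalived/check_rs.sh - RS-level VIP withdrawal script for keepalived

Runs when a single VS group on the node fails. It withdraws the corresponding external VIP so that business traffic no longer reaches this node.

#!/bin/bash
ip addr del 192.168.7.182/32 dev lo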
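Both scripts call `ip addr del` unconditionally, so a second run (for example check_rs.sh followed by check_kp.sh) reports an error for an address that is already gone. A minimal sketch of a guard is shown here. `has_addr` and `del_vip` are hypothetical names, and the parsing assumes the one-line format of `ip -o -4 addr show`, where the third field is `inet` and the fourth is the address with its prefix.

```shell
#!/bin/sh
# has_addr CIDR: read `ip -o -4 addr show` output on stdin and
# succeed only if CIDR (for example 192.168.7.182/32) is listed.
has_addr() {
    awk -v want="$1" '
        $3 == "inet" && $4 == want { found = 1 }
        END { exit !found }
    '
}

# del_vip CIDR DEV: delete the address only when it is present,
# so repeated runs stay quiet (hypothetical wrapper).
del_vip() {
    if ip -o -4 addr show dev "$2" | has_addr "$1"; then
        ip addr del "$1" dev "$2"
    fi
}
```

With these helpers, the body of check_rs.sh could be reduced to `del_vip 192.168.7.182/32 lo`, under the assumptions stated above.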

Start keepalived

service keepalived start

service keepalived status

Output

● keepalived.service - SYSV: Start and stop Keepalived
   Loaded: loaded (/etc/rc.d/init.d/keepalived; bad; vendor preset: disabled)
   Active: active (running) since Sun 2021-12-26 17:07:38 CST; 2s ago
     Docs: man:systemd-sysv-generator(8)
  Process: 149429 ExecStart=/etc/rc.d/init.d/keepalived start (code=exited, status=0/SUCCESS)
 Main PID: 149448 (keepalived)
   CGroup: /system.slice/keepalived.service
           ├─149448 /usr/local/dpvs/bin/keepalived -f /etc/keepalived/keepalived.conf -D
           ├─149449 /usr/local/dpvs/bin/keepalived -f /etc/keepalived/keepalived.conf -D
           └─149450 /usr/local/dpvs/bin/keepalived -f /etc/keepalived/keepalived.conf -D

Dec 26 17:07:38 homelab_inspur_node02 Keepalived_vrrp[149450]: Assigned address 192.168.7.82 for interface dpdk0.kni
Dec 26 17:07:38 homelab_inspur_node02 Keepalived_healthcheckers[149449]: SECURITY VIOLATION - check scripts are being executed but script_security not enabled.
Dec 26 17:07:38 homelab_inspur_node02 Keepalived_healthcheckers[149449]: Initializing ipvs
Dec 26 17:07:38 homelab_inspur_node02 Keepalived_vrrp[149450]: Registering DPVS gratuitous ARP.
Dec 26 17:07:38 homelab_inspur_node02 Keepalived_vrrp[149450]: (HA_INWEB) removing VIPs.
Dec 26 17:07:38 homelab_inspur_node02 Keepalived_vrrp[149450]: (HA_INWEB) Entering BACKUP STATE (init)
Dec 26 17:07:38 homelab_inspur_node02 Keepalived_vrrp[149450]: VRRP sockpool: [ifindex(7), family(IPv4), proto(112), unicast(0), fd(10,11)]
Dec 26 17:07:38 homelab_inspur_node02 Keepalived_healthcheckers[149449]: Gained quorum 1+0=1 <= 100 for VS [192.168.7.182]:tcp:80
Dec 26 17:07:38 homelab_inspur_node02 Keepalived_healthcheckers[149449]: Activating healthchecker for service [192.168.7.27]:tcp:80 for VS [192.168.7.182]:tcp:80
Dec 26 17:07:39 homelab_inspur_node02 Keepalived_healthcheckers[149449]: TCP connection to [192.168.7.27]:tcp:80 success.

Startup succeeded and the connection to the RS is healthy.

Note:

Starting keepalived does not add system IPs or routes.

If the system-level IPs and routes have not been configured beforehand, keepalived cannot handle network requests.

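Check the IPVS state under DPVS

Because DPVS takes over the underlying network stack, keepalived's LVS forwarding is handled by the IPVS module as modified by DPVS.

The system's native IPVS tools cannot show this connection state.

This also means that once DPVS has started and taken over the network stack, iptables on the host can no longer process these packets.

[root@localhost ~]# /usr/local/dpvs/bin/ipvsadm -Ln
IP Virtual Server version 0.0.0 (size=0)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 192.168.7.182:80 rr synproxy
  -> 192.168.7.27:80 FullNat 100 0 0

The RS is online and the forwarding mode is FullNat.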
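When several virtual servers and RS are configured, reading this table by eye becomes tedious. The sketch below pairs each RS with its VIP and forwarding mode. `list_rs` is a hypothetical helper, and the column positions are assumed from the sample output shown in this article rather than from any documented format guarantee.

```shell
#!/bin/sh
# list_rs: read `ipvsadm -Ln` output on stdin and print
# "<vip:port> <rs:port> <forward mode> <weight>" for each real server.
list_rs() {
    awk '
        # A virtual server line starts with the protocol name.
        $1 == "TCP" || $1 == "UDP" { vs = $2; next }
        # A real server line starts with "->"; skip the header row.
        $1 == "->" && $2 != "RemoteAddress:Port" {
            print vs, $2, $3, $4
        }
    '
}
```

On the DPVS host this could be used as `/usr/local/dpvs/bin/ipvsadm -Ln | list_rs`, for instance to confirm that every RS line reports FullNat.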

Install the TOA module on the RS

Without the TOA module, a request that reaches the RS through DPVS FullNat only carries the LIP as its source, so the server treats the LIP as the real client IP.

192.168.7.185:1089 80 - [26/Dec/2021:17:29:01 +0800] "GET /echo HTTP/1.1" 200 19 81 "-" "curl/7.29.0" - - - - 0.000 192.168.7.182 "- - -" "192.168.7.185"

192.168.7.185:1091 80 - [26/Dec/2021:17:29:02 +0800] "GET /echo HTTP/1.1" 200 19 81 "-" "curl/7.29.0" - - - - 0.000 192.168.7.182 "- - -" "192.168.7.185"

This setup uses Huawei's TOA module:

https://github.com/Huawei/TCP_option_address

Note:

Compilation fails on kernel 5.4.

The test machine runs kernel 4.4.215.
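Since the build is known to fail on kernel 5.4, it can help to check the running kernel before compiling. The following is a minimal sketch that relies on GNU `sort -V`. `version_lt` and `kernel_base` are hypothetical helper names, and treating every kernel from 5.4 upward as unsupported is an assumption: only 5.4 (failing) and 4.4.215 (working) were actually observed.

```shell
#!/bin/sh
# version_lt A B: succeed when version A sorts strictly before version B.
version_lt() {
    [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# kernel_base RELEASE: strip a distribution suffix such as "-1.el7.x86_64".
kernel_base() {
    printf '%s\n' "${1%%-*}"
}

# Report whether the running kernel is below the assumed 5.4 threshold.
if version_lt "$(kernel_base "$(uname -r)")" 5.4; then
    echo "kernel older than 5.4: the TOA build can be attempted"
else
    echo "kernel 5.4 or newer: the TOA build is expected to fail"
fi
```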

Build and install

cd /usr/local/src
wget https://github.com/Huawei/TCP_option_address/archive/master.zip
unzip master.zip
rm -f master.zip
cd TCP_option_address-master
make

Load the module

insmod /usr/local/src/TCP_option_address-master/toa.ko
# Verify that the module is loaded
lsmod | grep toa
toa 16384 0
# Remove the module (only if needed)
rmmod toa

Load at boot

echo "/sbin/insmod /usr/local/src/TCP_option_address-master/toa.ko" >> /etc/rc.local

After installation, new requests show the real client information:

192.168.7.55:57462 80 - [26/Dec/2021:17:36:31 +0800] "GET /echo HTTP/1.1" 200 19 81 "-" "curl/7.29.0" - - - - 0.000 192.168.7.182 "- - -" "192.168.7.55"

192.168.7.55:57464 80 - [26/Dec/2021:17:36:32 +0800] "GET /echo HTTP/1.1" 200 19 81 "-" "curl/7.29.0" - - - - 0.000 192.168.7.182 "- - -" "192.168.7.55"

Access test

Server configuration

The server runs Nginx. A simple static response is enough here to prove that the service is reachable.

test.conf

server {
    listen 80;
    charset utf-8;
    access_log logs/test.nestealin.com.access.main.log main;
    error_log logs/test.nestealin.com.error.log error;
    include firewall.conf;
    location /echo {
        return 200 'RS-7.27 is healthy.';
    }
}

Client access test

homedev_root >> ~ # for i in {1..10} ;do curl "http://192.168.7.182/echo" ; echo '' ; sleep 1 ;done
RS-7.27 is healthy.
RS-7.27 is healthy.
RS-7.27 is healthy.
RS-7.27 is healthy.
RS-7.27 is healthy.
RS-7.27 is healthy.
RS-7.27 is healthy.
RS-7.27 is healthy.
RS-7.27 is healthy.
RS-7.27 is healthy.

Check the IPVS connection state

[root@localhost ~]# /usr/local/dpvs/bin/ipvsadm -Lnc
[2]tcp 7s TIME_WAIT 192.168.7.55:57090 192.168.7.182:80 192.168.7.185:1097 192.168.7.27:80
[2]tcp 7s TIME_WAIT 192.168.7.55:57092 192.168.7.182:80 192.168.7.185:1099 192.168.7.27:80
[2]tcp 7s TIME_WAIT 192.168.7.55:57088 192.168.7.182:80 192.168.7.185:1095 192.168.7.27:80
[2]tcp 7s TIME_WAIT 192.168.7.55:57078 192.168.7.182:80 192.168.7.185:1089 192.168.7.27:80
[2]tcp 7s TIME_WAIT 192.168.7.55:57086 192.168.7.182:80 192.168.7.185:1093 192.168.7.27:80
[2]tcp 7s TIME_WAIT 192.168.7.55:57084 192.168.7.182:80 192.168.7.185:1091 192.168.7.27:80
[2]tcp 7s TIME_WAIT 192.168.7.55:57094 192.168.7.182:80 192.168.7.185:1101 192.168.7.27:80

A request from 192.168.7.55:57086 is received on 192.168.7.182:80 and forwarded to the RS 192.168.7.27:80 from 192.168.7.185:1093.

After Nginx handles it, the response returns to the client along the same path, according to the connection table.

The setup works as intended.
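Each connection line carries four endpoints: client, VIP, LIP and RS. The sketch below spells out that mapping for every line. `describe_conns` is a hypothetical helper, and the field order is assumed from the `ipvsadm -Lnc` sample shown in this article.

```shell
#!/bin/sh
# describe_conns: read `ipvsadm -Lnc` output on stdin and print one
# readable sentence per connection line (7 fields, protocol in field 1).
describe_conns() {
    awk 'NF == 7 && $1 ~ /tcp|udp/ {
        printf "client %s -> VIP %s, forwarded as LIP %s -> RS %s (%s)\n",
               $4, $5, $6, $7, $3
    }'
}
```

On the DPVS host it could be fed with `/usr/local/dpvs/bin/ipvsadm -Lnc | describe_conns`. The shell prompt line and any other line that does not have exactly seven fields are ignored.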

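This completes the configuration of intranet load balancing in FullNat mode.

Scenario 2: External load balancing

- The basic NIC configuration is the same as in scenario 1; the following covers the external-network configuration.

- The server is still Nginx answering the /echo request

Because the simulated external client IP is 10.12.26.20, the 10.0.0.0/8 route on dpdk0.kni must be removed. Otherwise replies would still leave through dpdk0, and packets would no longer go out through the interface they came in on.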
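To see why the 10.0.0.0/8 route captures replies to this client, the address can be tested against each configured prefix. This is a minimal sketch in plain shell arithmetic; `ip_to_int` and `ip_in_cidr` are hypothetical helper names, and 64-bit shell arithmetic is assumed.

```shell
#!/bin/sh
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1            # split the dotted quad into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# ip_in_cidr IP NET/PREFIX: succeed when IP falls inside the network.
ip_in_cidr() {
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    # Network mask: the top PREFIX bits set, kept within 32 bits.
    mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# Of the three intranet routes, only 10.0.0.0/8 matches the client.
for route in 192.168.0.0/16 172.16.0.0/12 10.0.0.0/8; do
    if ip_in_cidr 10.12.26.20 "$route"; then
        echo "10.12.26.20 matches $route"
    fi
done
```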

route del -net 10.0.0.0/8 dev dpdk0.kni

OSPF configuration

FRR is used to establish OSPF with the switch.

Install FRR / FRRouting

Official site: https://rpm.frrouting.org/

Download and install according to your system:

# possible values for FRRVER: frr-6 frr-7 frr-8 frr-stable
# frr-stable will be the latest official stable release
FRRVER="frr-stable"

# add RPM repository on CentOS 6
curl -O https://rpm.frrouting.org/repo/$FRRVER-repo-1-0.el6.noarch.rpm
sudo yum install ./$FRRVER*

# add RPM repository on CentOS 7
curl -O https://rpm.frrouting.org/repo/$FRRVER-repo-1-0.el7.noarch.rpm
sudo yum install ./$FRRVER*

# add RPM repository on CentOS 8
curl -O https://rpm.frrouting.org/repo/$FRRVER-repo-1-0.el8.noarch.rpm
sudo yum install ./$FRRVER*

# install FRR
sudo yum install frr frr-pythontools

Server configuration

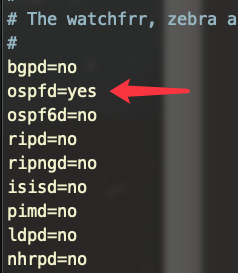

Enable the OSPF daemon

Edit the configuration file and set ospfd to yes.

vi /etc/frr/daemons
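For scripted installs, the same change can be made without an editor. The following is a minimal sketch using GNU `sed -i`; `enable_ospfd` is a hypothetical function name, and the `ospfd=no` line format is assumed to match the daemons file shipped with the FRR package.

```shell
#!/bin/sh
# enable_ospfd FILE: switch the ospfd line from "no" to "yes" in an
# FRR daemons file, leaving every other daemon (ospf6d, bgpd, ...) untouched.
enable_ospfd() {
    sed -i 's/^ospfd=no[[:space:]]*$/ospfd=yes/' "$1"
}
```

On the DPVS host this would be called as `enable_ospfd /etc/frr/daemons`, followed by a quick `grep '^ospfd=' /etc/frr/daemons` to confirm the result.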

启动 frr

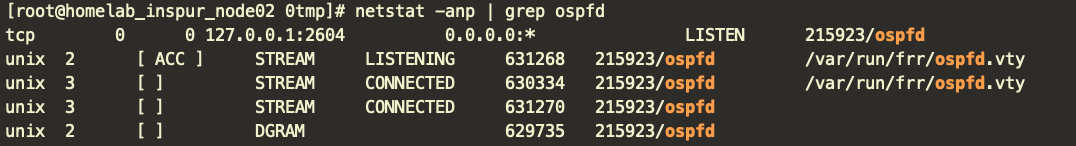

service frr start确认启动状态

netstat -anp | grep ospfd

Enter the vtysh shell

# Enter the shell
[root@homelab_inspur_node02 0tmp]# vtysh

Hello, this is FRRouting (version 8.1).
Copyright 1996-2005 Kunihiro Ishiguro, et al.

# Show the current configuration
homelab_inspur_node02# show running-config
Building configuration...

Current configuration:
!
frr version 8.1
frr defaults traditional
hostname homelab_inspur_node02
log syslog informational
no ipv6 forwarding
!
end

Start configuring

homelab_inspur_node02# configure terminal
homelab_inspur_node02(config)# router ospf

# Advertise the internal segment on dpdk1; the area must match the switch. This is used to establish the OSPF adjacency
homelab_inspur_node02(config-router)# network 10.20.30.0/24 area 0

# Advertise this host's "external" VIP so that, once OSPF is up, the switch knows the next hop for the VIP is this machine
homelab_inspur_node02(config-router)# network 100.96.122.210/32 area 0

# Save the configuration
homelab_inspur_node02(config-router)# do write file

The OSPF configuration on the server side is now complete.

Switch configuration

Create the gateway

sys
interface vlanif 2345
# Set the gateway address
ip address 10.20.30.1 255.255.255.0

Assign the port VLAN

interface XGigabitEthernet0/0/3
port link-type trunk
port trunk pvid vlan 2345
port trunk allow-pass vlan 2 to 4094
stp disable
stp edged-port enable

Configure OSPF
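The `network` statement on the switch in this section takes a wildcard (inverse) mask rather than a prefix length or a netmask: 10.20.30.0/24 is written as `10.20.30.0 0.0.0.255`. As a small worked example, the conversion can be sketched in shell arithmetic; `wildcard_mask` is a hypothetical helper name.

```shell
#!/bin/sh
# wildcard_mask PREFIX: print the wildcard (inverse) mask for a prefix
# length, i.e. the host bits set to 1 and the network bits set to 0.
wildcard_mask() {
    inv=$(( 4294967295 >> $1 ))     # 0xFFFFFFFF shifted right by PREFIX
    echo "$(( (inv >> 24) & 255 )).$(( (inv >> 16) & 255 )).$(( (inv >> 8) & 255 )).$(( inv & 255 ))"
}

wildcard_mask 24    # the /24 used for the OSPF peering subnet
```

For the peering subnet this yields the value entered on the switch. A /32 such as the VIP would map to 0.0.0.0, although in this setup the VIP is only advertised from the FRR side.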

# Create OSPF process 2 with router ID 0.0.0.2
[NesHomeLab-S5720]ospf 2 router-id 0.0.0.2

# Show the initial configuration
[NesHomeLab-S5720-ospf-2]dis this
#
ospf 2 router-id 0.0.0.2
#
return

# Enter area 0, which must be the same area as the DPVS host
[NesHomeLab-S5720-ospf-2]area 0

# Advertise the OSPF peering subnet 10.20.30.0/24; a wildcard (inverse) mask is required
[NesHomeLab-S5720-ospf-2-area-0.0.0.0]network 10.20.30.0 0.0.0.255

# Check the advertised networks
[NesHomeLab-S5720-ospf-2-area-0.0.0.0]dis this
#
 area 0.0.0.0
  network 10.20.30.0 0.0.0.255
#
return
[NesHomeLab-S5720-ospf-2-area-0.0.0.0]q

# Check the adjacency state; the neighbor has been discovered
[NesHomeLab-S5720-ospf-2]dis ospf peer
 OSPF Process 1 with Router ID 0.0.0.1
 OSPF Process 2 with Router ID 0.0.0.2
 Neighbors
 Area 0.0.0.0 interface 10.20.30.1(Vlanif2345)'s neighbors
 Router ID: 192.168.7.82 Address: 10.20.30.45
 State: Full Mode:Nbr is Master Priority: 1
 DR: 10.20.30.45 BDR: 10.20.30.1 MTU: 1500
 Dead timer due in 37 sec
 Retrans timer interval: 4
 Neighbor is up for 00:00:11
 Authentication Sequence: [ 0 ]

Check the advertised routes

<NesHomeLab-S5720>dis ospf routing interface Vlanif 2345
 OSPF Process 1 with Router ID 0.0.0.1
 OSPF Process 2 with Router ID 0.0.0.2
 Destination : 10.20.30.0/24
 AdverRouter : 0.0.0.2 Area : 0.0.0.0
 Cost : 1 Type : Transit
 NextHop : 10.20.30.1 Interface : Vlanif2345
 Priority : Low Age : 00h00m00s
 Destination : 100.96.122.210/32
 AdverRouter : 192.168.7.182 Area : 0.0.0.0
 Cost : 1 Type : Stub
 NextHop : 10.20.30.45 Interface : Vlanif2345
 Priority : Medium Age : 00h00m55s

The switch has received the "external IP" 100.96.122.210, with next hop 10.20.30.45.

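keepalived configuration

Building on the existing configuration, add a set of external-network settings.

With the repeated parts omitted, the additions are the local address group laddr_g2 and a VS listener:

...

# External local address group: traffic arriving on 100.96.122.210:80 is forwarded to the RS from 192.168.7.188
local_address_group laddr_g2 {
    192.168.7.188 dpdk0
}

...

virtual_server 100.96.122.210 80 {   # accept requests on port 80 of the VIP
    delay_loop 3                     # interval between RS health checks, in seconds
    lb_algo rr
    lb_kind FNAT
    protocol TCP
    syn_proxy
    laddr_group_name laddr_g2        # connect to the RS from this local address group
    # Run this script if all RS health checks fail
    quorum_down /etc/keepalived/check_rs.sh
    # For multiple RS, repeat the block below; no separator is needed between blocks
    ## Explanation of a single RS block
    # Forward from port 80 of the VIP to port 80 of the RS
    real_server 192.168.7.27 80 {
        weight 100
        TCP_CHECK {
            # Connection timeout 3 s, 2 retries, 3 s between retries
            # Combined with delay_loop, the RS is taken offline after a sustained failure of 9 s at the shortest and 12 s at the longest
            connect_timeout 3
            nb_sock_retry 2
            delay_before_retry 3
            connect_port 80
        }
    }
}

Reload the keepalived configuration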

service keepalived reload

Check the IPVS state under DPVS

[root@localhost ~]# /usr/local/dpvs/bin/ipvsadm -Ln
IP Virtual Server version 0.0.0 (size=0)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 100.96.122.210:80 rr synproxy
  -> 192.168.7.27:80 FullNat 100 0 0
TCP 192.168.7.182:80 rr synproxy
  -> 192.168.7.27:80 FullNat 100 0 0

The RS behind the "external entry" is also online, and the forwarding mode is FullNat.

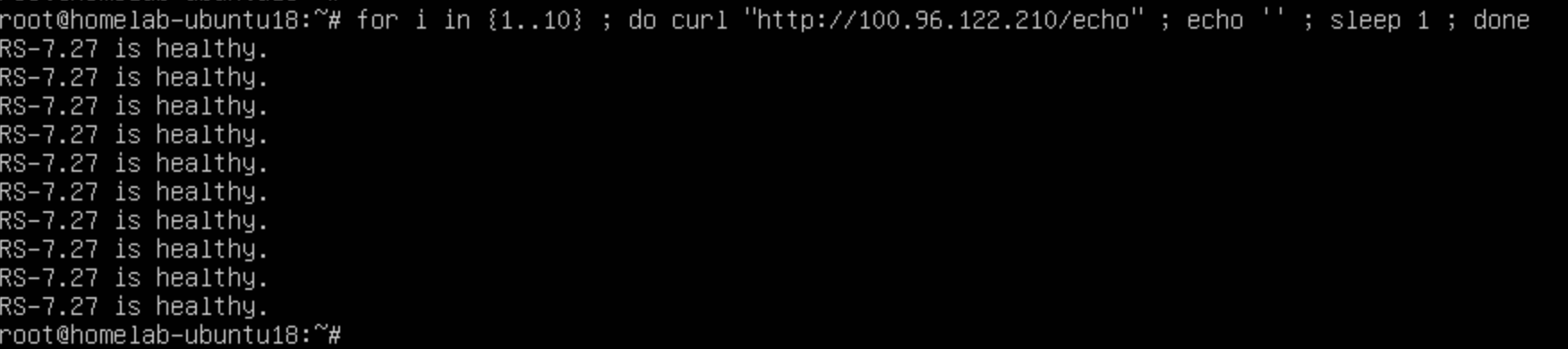

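Access test

- Client request

Check the IPVS forwarding state

[root@localhost ~]# /usr/local/dpvs/bin/ipvsadm -Lnc
[2]tcp 7s TIME_WAIT 10.12.26.20:60086 100.96.122.210:80 192.168.7.188:1031 192.168.7.27:80
[2]tcp 7s TIME_WAIT 10.12.26.20:60084 100.96.122.210:80 192.168.7.188:1029 192.168.7.27:80
[2]tcp 7s TIME_WAIT 10.12.26.20:60080 100.96.122.210:80 192.168.7.188:1025 192.168.7.27:80
[2]tcp 7s TIME_WAIT 10.12.26.20:60082 100.96.122.210:80 192.168.7.188:1027 192.168.7.27:80

A request from 10.12.26.20:60086 is received on 100.96.122.210:80 and forwarded to the RS 192.168.7.27:80 from 192.168.7.188:1031.

After Nginx handles it, the response returns to the client along the same path, according to the connection table.

The setup works as intended.

This completes the configuration of external load balancing in FullNat mode.

Known issues

- The default route goes through the external NIC and only a limited set of routes is configured, so the DPVS host itself cannot reach the external network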